Software products based on real-time 3D reconstruction

For a long time, photogrammetry was based on analogue film, manual image orientation, and manual measurement. The time from flight to finished product was often weeks or months. Even today, when we have become accustomed to digital images and automatic processes, standard photogrammetric processing still takes minutes or hours, which rules out many time-critical applications.

At I-CONIC, we took on the challenge of building software that creates 3D models in real time, to enable new applications. The 3D model is not the main goal, however, but a means of producing geographically correct information for users who need it immediately for different types of analysis and decision-making.

Our basic technology can be used for imagery from all types of platforms, but we focus on video and images from drones. Our software uses GNSS information when available, but it also works when GNSS data is missing or corrupt.

We make this possible by challenging some common practices in current photogrammetry:

- We use video.

- We are completely GPU focused.

- We combine advanced photogrammetric mathematics with computer vision.

- Metadata is not needed to create models (although it is, of course, needed for georeferencing).

- Our concept includes video from multiple and synchronised platforms, for example drone swarms.

We are creating actionable information through 3D models produced and visualised at video rate:

- Instant 3D models.

- Instant stereoscopic viewing, also from 2D video.

- Instant 3D measurements, also automatic.

- Can use video from different platforms: satellites, drones, aircraft, etc.

- Can be used for online remote monitoring and operations.

- Does not require expensive hardware.

- Complements other technologies.

Positioning from drone video

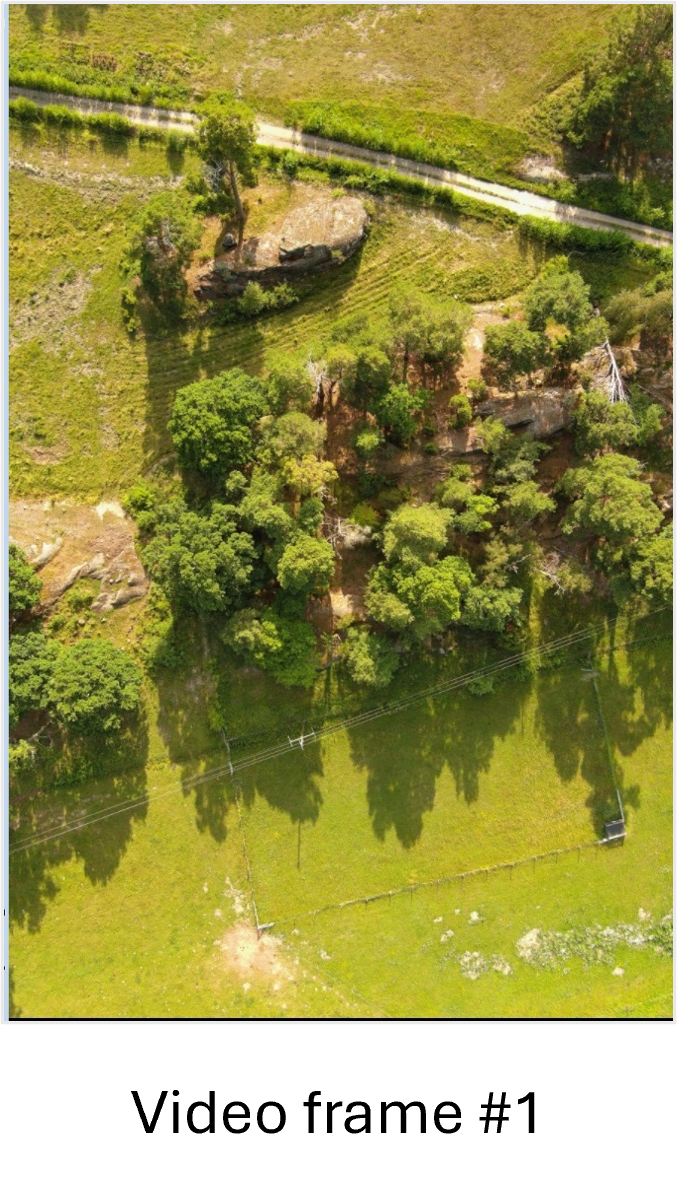

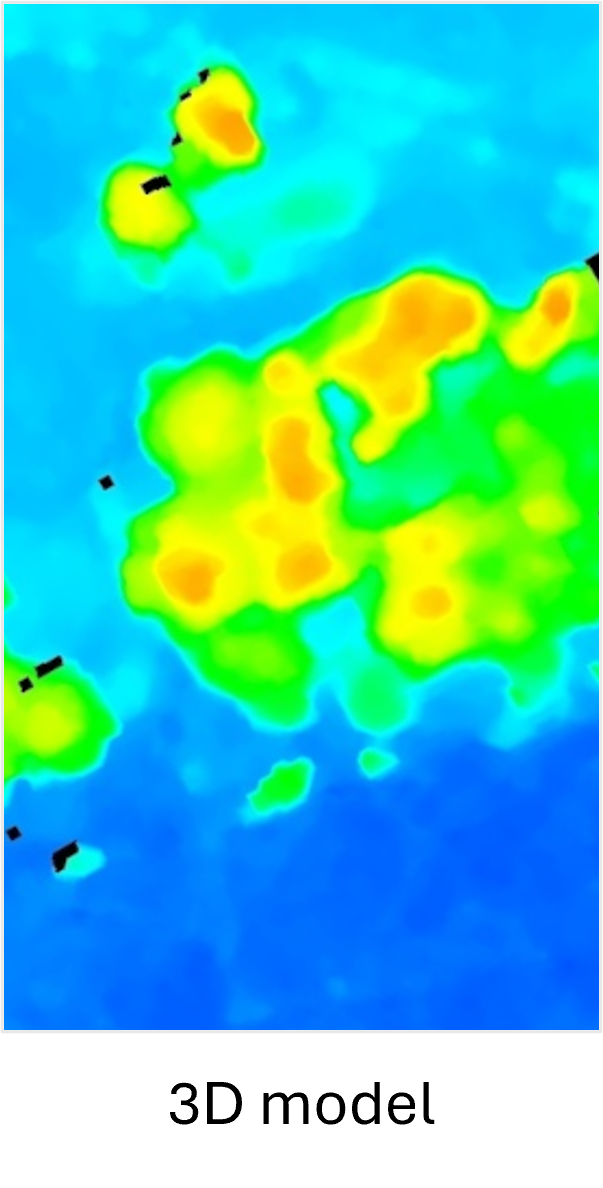

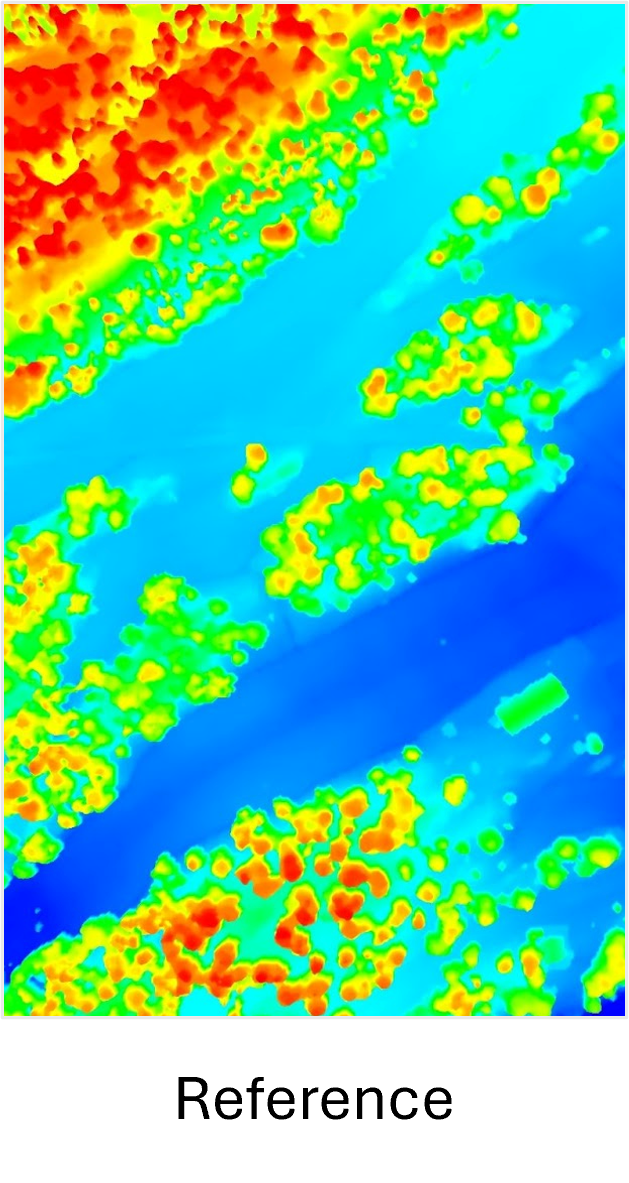

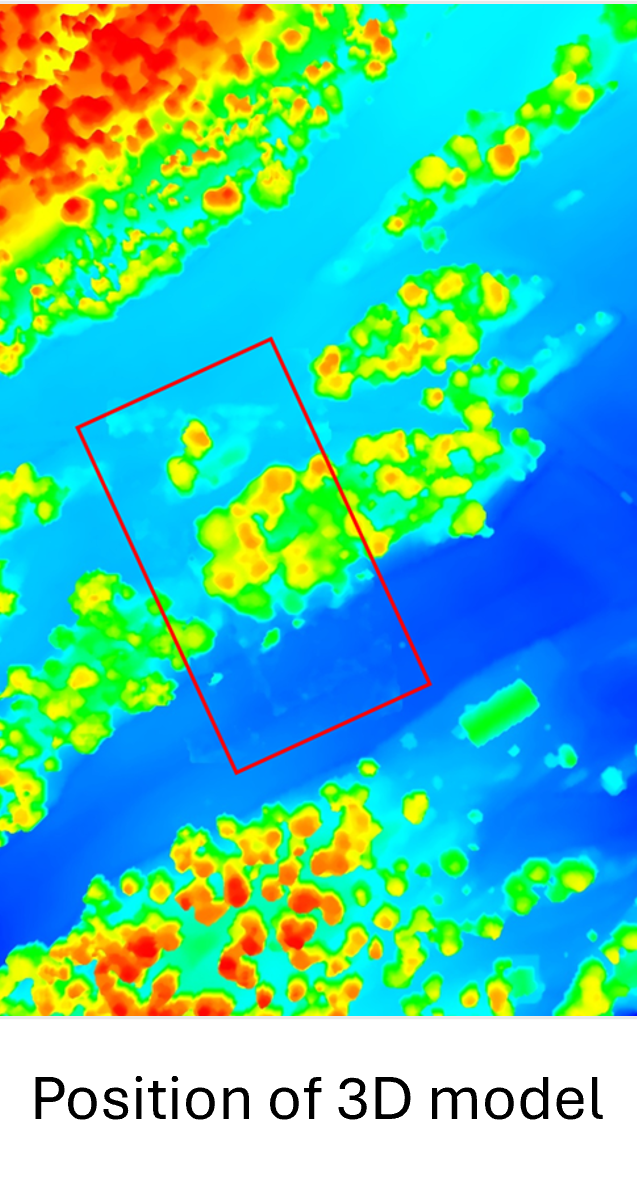

Two frames from the drone video are selected as a suitable stereo pair and used to calculate a 3D model (shown below as a surface model, DSM). This model is then compared to, and aligned with, a geocorrect reference DSM covering a larger area. The resulting position in the reference DSM provides the exact coordinates of the video frames, and hence of every object detected in them. Furthermore, the position of the drone itself can be calculated without any information from GPS or other GNSS satellites.

The entire process takes less than 0.5 seconds, and is repeated regularly during the flight.
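The alignment step described above can be illustrated with a minimal sketch. It is not I-CONIC's implementation: it assumes both DSMs are small grids of heights with the same cell size and orientation, and it uses a plain exhaustive search that minimises the mean squared height difference after removing each patch's mean height. The names `align_dsm` and `cell_to_world`, and the origin and cell size in the example, are hypothetical.

```python
def align_dsm(local, reference):
    """Find where the small `local` DSM fits best inside `reference`.

    Both are lists of rows of heights on the same grid spacing (assumption).
    Returns (row, col, cost) for the best upper-left offset.
    """
    lh, lw = len(local), len(local[0])
    rh, rw = len(reference), len(reference[0])
    n = lh * lw
    local_mean = sum(map(sum, local)) / n
    best = None
    for r in range(rh - lh + 1):
        for c in range(rw - lw + 1):
            window = [reference[r + i][c:c + lw] for i in range(lh)]
            win_mean = sum(map(sum, window)) / n
            # Compare shapes only: subtracting the means ignores a constant
            # vertical offset between the two models.
            cost = sum(
                ((local[i][j] - local_mean) - (window[i][j] - win_mean)) ** 2
                for i in range(lh)
                for j in range(lw)
            ) / n
            if best is None or cost < best[2]:
                best = (r, c, cost)
    return best


def cell_to_world(row, col, origin_x, origin_y, cell_size):
    """Map a grid cell to map coordinates of its upper-left corner.

    Assumes a north-up grid whose origin is the upper-left corner.
    """
    return origin_x + col * cell_size, origin_y - row * cell_size


# Toy reference DSM: flat ground at 10 m with a few raised structures.
reference = [
    [10, 10, 10, 10, 10, 10],
    [10, 10, 10, 14, 10, 10],
    [10, 10, 10, 14, 18, 10],
    [10, 12, 10, 10, 18, 10],
    [10, 12, 10, 10, 10, 10],
    [10, 10, 10, 10, 10, 10],
]
# Local DSM: the 3x3 patch at row 1, column 3, with a 2.5 m vertical bias.
local = [[h + 2.5 for h in row[3:6]] for row in reference[1:4]]

row, col, cost = align_dsm(local, reference)
print(row, col)
print(cell_to_world(row, col, 500000.0, 6600000.0, 2.0))
```

A real system would also have to handle rotation, scale, and noise, and would not search exhaustively. The sketch only shows why a match in the height domain directly yields map coordinates.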

Normally, existing reference DSMs created from aerial photos or laser scanning are used, and such data is readily available in many countries. When it is not available, as is often the case in conflict zones, it is possible to use DSMs created from satellite images, which are globally available.

Drone navigation in GNSS-denied environments is a challenge in many areas around the globe, not least in Eastern Europe. The described solution for GNSS-free positioning can be used for autonomous drone navigation, preferably together with some kind of Inertial Navigation System (INS).

I-CONIC’s positioning concept is cost-efficient and reliable: no expensive hardware or active sensors are needed. Other solution providers use image-to-image matching for positioning, which can work under ideal circumstances. However, the visual/image domain changes over time: shadows and light change during the day, and vegetation, snow, etc. vary over the seasons. The 3D domain is much more stable over time, and hence a better basis for GNSS-free navigation.

Real-time 3D reconstruction

The two video sequences are taken from the same ordinary 2D drone video clip, but start a few seconds apart, which means that each object on the ground is seen from a different viewing angle. Each pair of frames is then used as input for the photogrammetric 3D modelling.

If everything we need to image is static, we can use video from a single drone to generate 3D models. Within one and the same video, we skip a constant number of frames and create a continuous stream of frame pairs. For example, we can create a stereo pair from frames 1 and 51, the next stereo pair from frames 2 and 52, etc. From the stereo pairs we then take all the classic photogrammetric steps (and some more) to generate block-triangulated 3D point clouds and meshes. This is not hugely different from the way 3D models are created by other software packages on the market today, except that we do it from video and we do it instantly (at video rate).
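The frame pairing can be sketched in a few lines. This is an illustration only: the function names are hypothetical, and the baseline helper assumes a straight flight at constant speed, which the text does not state.

```python
from typing import Iterator, Tuple


def stereo_pairs(n_frames: int, skip: int = 50, first: int = 1) -> Iterator[Tuple[int, int]]:
    """Yield (left, right) frame numbers separated by a constant `skip`.

    Frames are numbered first, first + 1, ..., first + n_frames - 1.
    """
    for left in range(first, first + n_frames - skip):
        yield left, left + skip


def stereo_baseline_m(speed_m_s: float, skip: int, fps: float) -> float:
    """Distance flown between the two frames of a pair.

    Assumes straight flight at constant speed (illustrative simplification).
    """
    return speed_m_s * skip / fps


pairs = list(stereo_pairs(100))
print(pairs[:2])   # [(1, 51), (2, 52)]
print(len(pairs))  # 50
print(stereo_baseline_m(10.0, 50, 25.0))  # 20.0
```

The skip value controls the stereo baseline: a larger skip gives a wider viewing-angle difference between the two frames, at the cost of less overlap between them.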

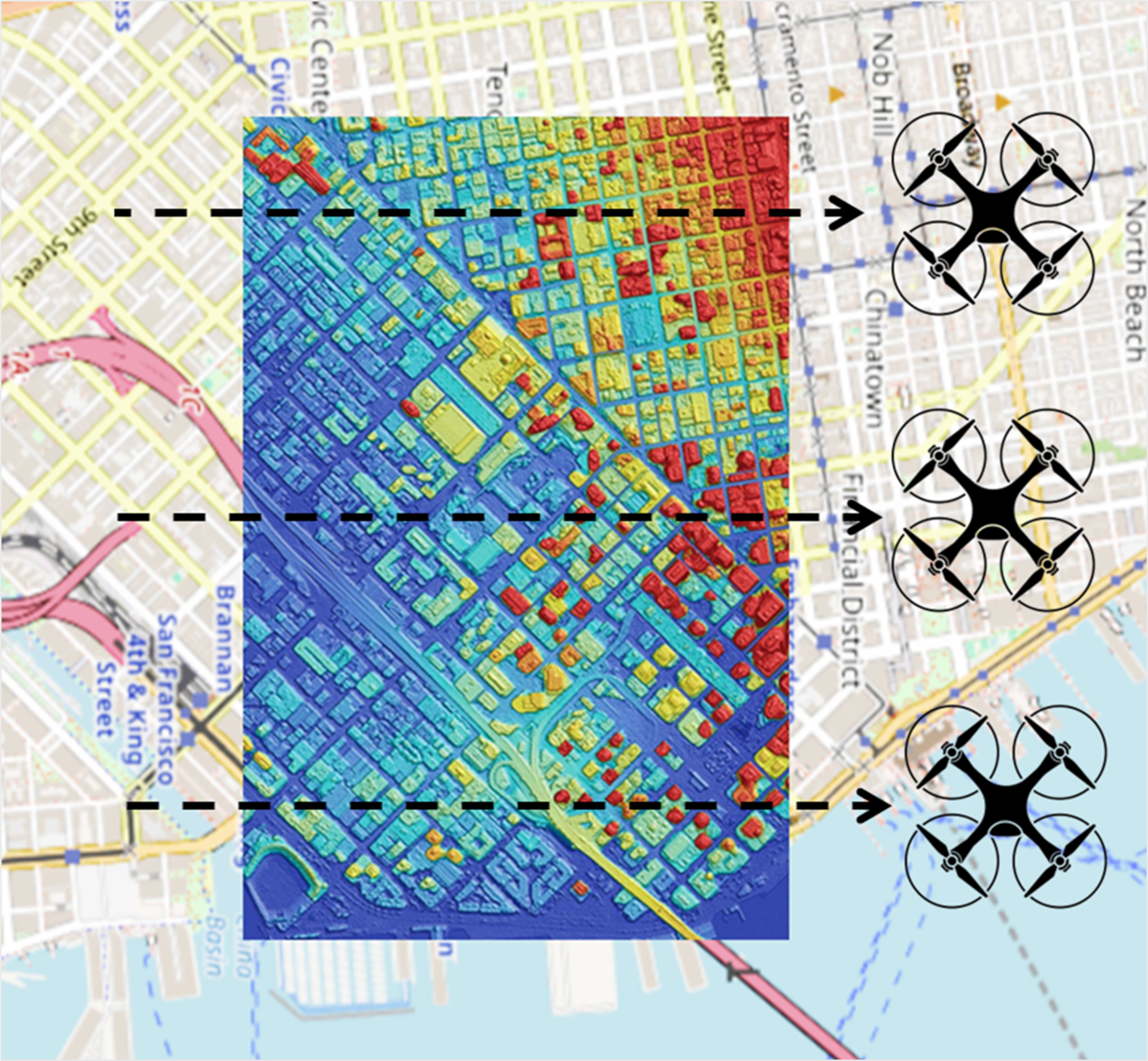

The 3D reconstruction can also be done using frames from two different video streams captured by drones flying in parallel, i.e. not only along-track as described above, but also across-track. A swarm of drones can cover the area of interest much faster than a single drone flying back and forth.

The Video Stereoscope

An anaglyph 3D stereo video created by I-CONIC’s Video Stereoscope. To obtain the 3D effect of this particular video you need a pair of red/cyan anaglyph glasses.

Our ability to interpret images is far better in 3D than in 2D. Anyone who has seen Avatar, Gravity or any other 3D movie knows the increased realism this provides. With the same technique as described above for static 3D models, we can process the video through so-called epipolar resampling, and thereby play a regular 2D video as a 3D video. The stereoscopic video requires the camera to move, preferably perpendicular to the camera’s viewing direction (that is, parallel to the image plane), as when filming from the side window of a car.
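Once a left and a right frame have been resampled to a common epipolar geometry, combining them for red/cyan glasses is simple. The sketch below shows the standard colour anaglyph composition, not necessarily what the Video Stereoscope does internally. It assumes that frames are lists of rows of `(R, G, B)` tuples and that the resampling has already been done. The function name is hypothetical.

```python
def make_anaglyph(left, right):
    """Combine two rectified RGB frames into one red/cyan anaglyph frame.

    The red channel comes from the left frame, green and blue from the
    right frame, so red/cyan glasses route each view to the matching eye.
    """
    same_shape = len(left) == len(right) and all(
        len(lrow) == len(rrow) for lrow, rrow in zip(left, right)
    )
    if not same_shape:
        raise ValueError("frames must have identical dimensions")
    return [
        [(lp[0], rp[1], rp[2]) for lp, rp in zip(lrow, rrow)]
        for lrow, rrow in zip(left, right)
    ]


left_frame = [[(200, 10, 20), (180, 15, 25)]]
right_frame = [[(30, 150, 160), (40, 140, 170)]]
print(make_anaglyph(left_frame, right_frame))
```

For VR glasses or a 3D TV the two frames would instead be sent to separate displays or interleaved, but the input pair is the same.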

We have not seen epipolar resampling in real-time to create 3D stereoscopic video before, but we are convinced by tests with video footage from both drones and mobile cameras that this can be a useful tool, e.g. for inspection by drones or forest inventories in the field.

We call this the Video Stereoscope because it works like a classic stereoscope for pictures, but shows a moving video in 3D instead. Stereoscopic viewing also requires some kind of equipment that presents the left frame of the stereo pair to the left eye and the right frame to the right eye. Such equipment can be, for example, VR glasses, a 3D TV or red/cyan anaglyph glasses.

Links:

https://en.wikipedia.org/wiki/Stereoscopy

https://en.wikipedia.org/wiki/3D_stereo_view

We also invented Live 3D

3D modelling from multiple drones with synchronised video feeds makes it possible to create a 4D model: a time-dependent 3D model in which moving and changing objects are also modelled. It is like a computer game where you move through an animated world, but in a model of the real world, created in real time.

We prefer to call it Live 3D. It can also be described as a digital twin of an event, for example a complete time-dependent 3D model of a fire, to be used by analysts and decision-makers in real time, or afterwards for training, etc.

Our Live 3D concept is patented in Europe and the US.